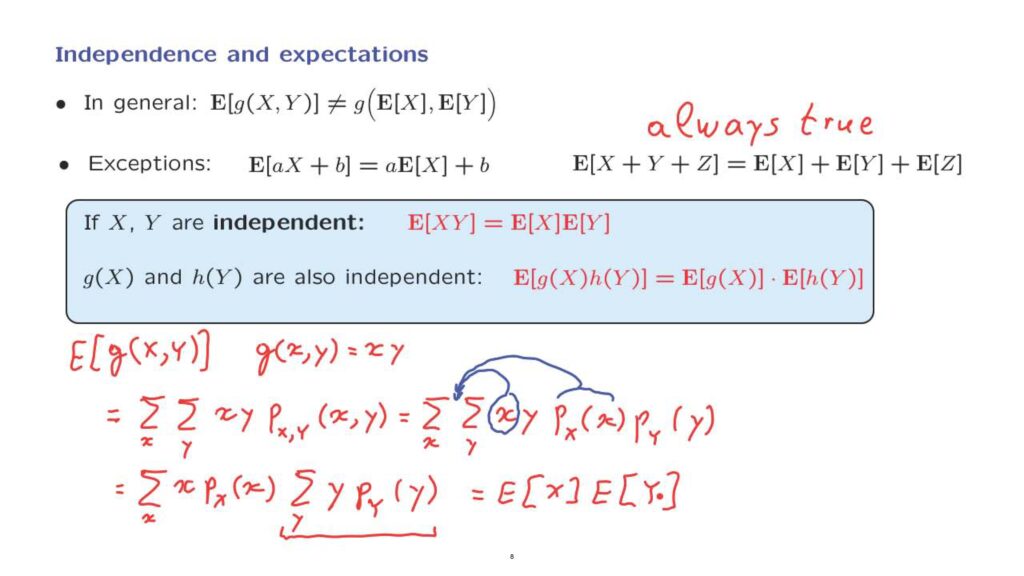

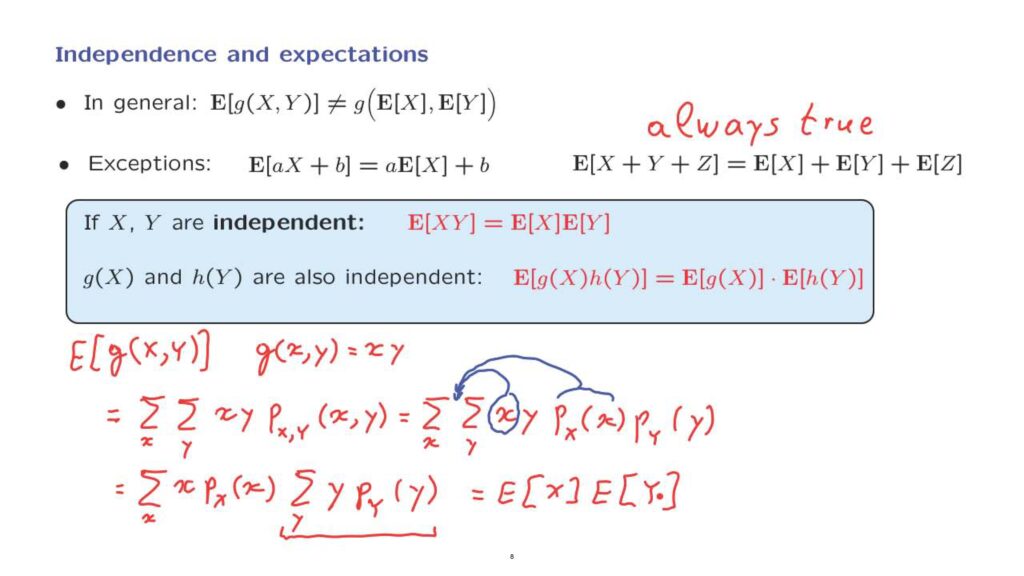

When we have independence, does anything interesting happen to expectations? We know that, in general, the expected value of a function of random variables is not the same as applying the function to the expected values.

And we also know that there are some exceptions where we do get equality.

This is the case where we are dealing with linear functions of one or more random variables.

Note that this last property is always true and does not require any independence assumptions.
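For reference, the two facts just recalled can be written out as follows. The constants a, b, c and the generic function g are notation assumed here for illustration; the lecture does not name them.

$$
E\big[g(X, Y)\big] \neq g\big(E[X],\, E[Y]\big) \quad \text{in general},
\qquad
E[aX + bY + c] = a\,E[X] + b\,E[Y] + c .
$$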

When we have independence, there is one additional property that turns out to be true.

The expected value of the product of two independent random variables is the product of their expected values.

Let us verify this relation.

We are dealing here with the expected value of a function of random variables, where the function is defined to be the product function.

So to calculate this expected value, you can use the expected value rule.

And we are going to get the sum over all x, the sum over all y, of g of x, y, but in this case, g of x, y is x times y.

And then we weigh all those values according to the probabilities as given by the joint PMF.

Now, using independence, this sum can be changed into the following form: the joint PMF is the product of the marginal PMFs.

And now when we look at the inner sum over all values of y, we can take outside the summation those terms that do not depend on y, namely x and the marginal PMF of X.

And this is going to yield a summation over x of x times the marginal PMF of X, and then the summation over all y of y times the marginal PMF of Y.

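But now we recognize that the inner sum, over all values of y, is just the expected value of Y.

That is a constant, so it can be pulled outside the sum over x. And then we will be left with another expression, the sum over x of x times the marginal PMF of X, which is the expected value of X.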

And this completes the argument.
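Written out, the steps of this argument look roughly as follows. The symbols p_{X,Y}, p_X, and p_Y for the joint and marginal PMFs are notation assumed here; the spoken derivation refers to them only by name.

$$
\begin{aligned}
E[XY] &= \sum_x \sum_y x\,y\; p_{X,Y}(x, y) && \text{(expected value rule)} \\
      &= \sum_x \sum_y x\,y\; p_X(x)\, p_Y(y) && \text{(independence)} \\
      &= \sum_x x\, p_X(x) \sum_y y\, p_Y(y) && \text{(terms not depending on } y \text{ move outside)} \\
      &= E[Y] \sum_x x\, p_X(x) \\
      &= E[X]\, E[Y].
\end{aligned}
$$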

Now, consider a function of X and another function of Y.

X and Y are independent.

Intuitively, the value of X does not give you any new information about Y, so the value of g of X does not give you any new information about h of Y.

So on the basis of this intuitive argument, the functions g of X and h of Y are also independent of each other.

Therefore, we can apply the fact that we have already proved, but with g of X in the place of X and h of Y in the place of Y.

And this gives us this more general fact that the expected value of the product of two functions of independent random variables is equal to the product of the expectations of these functions.
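As a quick sanity check, both product facts can be tried out numerically on a small example. This is only a sketch under assumptions: the two marginal PMFs, the functions g and h, and the helper names `expectation` and `expectation_joint` are all made up for illustration, and independence is built in by taking the joint PMF to be the product of the marginals.

```python
from fractions import Fraction
from itertools import product

# Hypothetical marginal PMFs, chosen only for illustration.
p_X = {1: Fraction(1, 2), 2: Fraction(1, 4), 4: Fraction(1, 4)}
p_Y = {-1: Fraction(1, 3), 0: Fraction(1, 3), 3: Fraction(1, 3)}


def expectation(pmf, f=lambda v: v):
    """Expected value rule for one random variable: sum of f(v) * p(v)."""
    return sum(f(v) * p for v, p in pmf.items())


def expectation_joint(pmf_x, pmf_y, f):
    """Expected value rule for f(X, Y) when X and Y are independent,
    so the joint PMF is the product of the two marginal PMFs."""
    return sum(f(x, y) * pmf_x[x] * pmf_y[y] for x, y in product(pmf_x, pmf_y))


# E[XY] compared with E[X] * E[Y].
e_x = expectation(p_X)
e_y = expectation(p_Y)
e_xy = expectation_joint(p_X, p_Y, lambda x, y: x * y)

# Hypothetical functions g and h, again only for illustration.
g = lambda x: x ** 2
h = lambda y: y + 1

# E[g(X) h(Y)] compared with E[g(X)] * E[h(Y)].
e_g = expectation(p_X, g)
e_h = expectation(p_Y, h)
e_gh = expectation_joint(p_X, p_Y, lambda x, y: g(x) * h(y))

print(e_xy == e_x * e_y)
print(e_gh == e_g * e_h)
```

Exact fractions are used instead of floating point so that the two sides can be compared with plain equality. A check like this on one example illustrates the property; it does not replace the derivation.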

We could also prove this property directly without relying on the intuitive argument.

We could just follow the same steps as in this derivation.

Wherever there is an X, we would write g of X, and wherever there is a Y, we would write h of Y.

And the same algebra would go through, and we would end up with the expected value of g of X times the expected value of h of Y.
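Carrying out that substitution, the direct argument would read roughly as follows, again using p_{X,Y}, p_X, and p_Y as assumed notation for the joint and marginal PMFs.

$$
\begin{aligned}
E\big[g(X)\,h(Y)\big] &= \sum_x \sum_y g(x)\,h(y)\; p_{X,Y}(x, y) \\
                      &= \sum_x \sum_y g(x)\,h(y)\; p_X(x)\, p_Y(y) \\
                      &= \sum_x g(x)\, p_X(x) \sum_y h(y)\, p_Y(y) \\
                      &= E\big[g(X)\big]\, E\big[h(Y)\big].
\end{aligned}
$$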