We now come to a very important concept, the concept of independence of random variables.

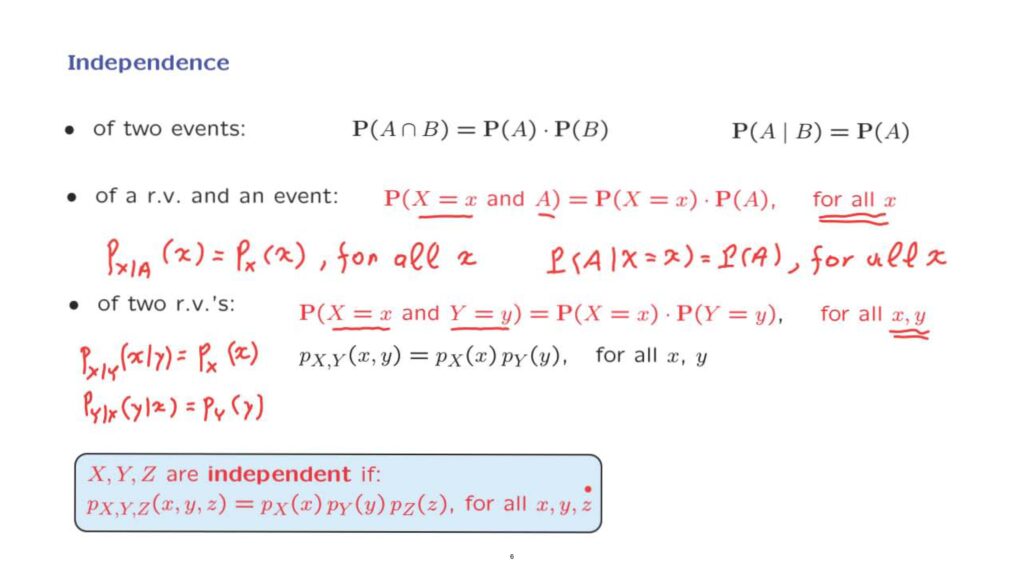

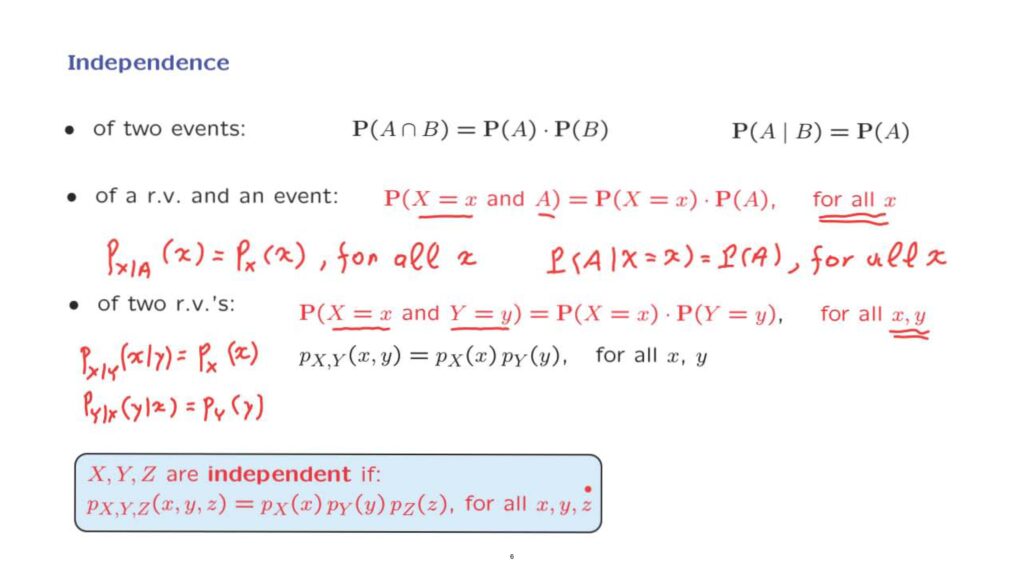

We are already familiar with the notion of independence of two events.

We have the mathematical definition, and the interpretation is that conditional probabilities are the same as unconditional ones.

Intuitively, when you are told that B occurred, this does not change your beliefs about A, and so the conditional probability of A is the same as the unconditional probability.
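Written out, and assuming the usual notation since the slide is not reproduced in this transcript, the definition and its interpretation are:

$$
\mathbf{P}(A \cap B) = \mathbf{P}(A)\,\mathbf{P}(B), \qquad \mathbf{P}(A \mid B) = \mathbf{P}(A) \quad \text{when } \mathbf{P}(B) > 0.
$$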

We have a similar definition of independence of a random variable and an event A.

The mathematical definition is that the event A and the event that X takes on a specific value little x are independent in the ordinary sense.

So the probability of both of these events happening is the product of their individual probabilities.

But we require this to be true for all values of little x.
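In symbols, this requirement would read as follows, where writing $p_X$ for the PMF of X is the usual convention and is assumed here rather than taken from the slide:

$$
\mathbf{P}(X = x \text{ and } A) = \mathbf{P}(X = x)\,\mathbf{P}(A) = p_X(x)\,\mathbf{P}(A), \quad \text{for all } x.
$$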

Intuitively, if I tell you that A occurred, this is not going to change the distribution of the random variable X.

This is one interpretation of what independence means in this context.

And this has to be true for all values of little x. That is, when the event A occurs, the probability of any particular little x is going to be the same as the original unconditional probability.

We also have a symmetrical interpretation.

If I tell you the value of X, then the conditional probability of event A is not going to change.

It’s going to be the same as the unconditional probability.

And again, this is going to be the case for all values of little x.

So, no matter what I tell you about X, your beliefs about A are not going to change.
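The two interpretations can be stated side by side. This is a sketch in standard conditional-PMF notation, which is an assumption here, and each conditional quantity is only defined when the conditioning event has positive probability:

$$
p_{X \mid A}(x) = p_X(x) \ \text{ for all } x \quad (\text{assuming } \mathbf{P}(A) > 0),
$$

$$
\mathbf{P}(A \mid X = x) = \mathbf{P}(A) \ \text{ for all } x \text{ with } p_X(x) > 0.
$$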

We can now move on and define the notion of independence of two random variables.

The mathematical definition is that the event that X takes on a value little x and the event that Y takes on a value little y, these two events are independent, and this is true for all possible values of little x and little y.

In PMF notation, this relation here can be written in this form.

And basically, the joint PMF factors out as a product of the marginal PMFs of the two random variables.

Again, this relation has to be true for all possible little x and little y.
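For reference, the definition and its PMF form would look like this, assuming the usual notation $p_{X,Y}$ for the joint PMF and $p_X$, $p_Y$ for the marginals:

$$
\mathbf{P}(X = x \text{ and } Y = y) = \mathbf{P}(X = x)\,\mathbf{P}(Y = y), \quad \text{for all } x, y,
$$

$$
p_{X,Y}(x, y) = p_X(x)\,p_Y(y), \quad \text{for all } x, y.
$$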

What does independence mean? When I tell you the value of Y, no matter what value I tell you, your beliefs about X will not change.

So the conditional PMF of X given Y is going to be the same as the unconditional PMF of X.

And this has to be true for any values of the arguments of these PMFs.

There is also a symmetric interpretation, which is that the conditional PMF of Y given X is going to be the same as the unconditional PMF of Y.
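To see why the factorization gives these interpretations, one can divide the joint PMF by a marginal. A short derivation in the usual notation (assumed here), valid wherever the conditioning value has positive probability:

$$
p_{X \mid Y}(x \mid y) = \frac{p_{X,Y}(x, y)}{p_Y(y)} = \frac{p_X(x)\,p_Y(y)}{p_Y(y)} = p_X(x), \quad \text{for all } x \text{ and all } y \text{ with } p_Y(y) > 0,
$$

$$
p_{Y \mid X}(y \mid x) = \frac{p_{X,Y}(x, y)}{p_X(x)} = p_Y(y), \quad \text{for all } y \text{ and all } x \text{ with } p_X(x) > 0.
$$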

We have the symmetric interpretation because, as we can see from this definition, X and Y have symmetric roles.
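As a rough illustration of the definition, here is a minimal Python sketch that checks whether a small joint PMF factors into the product of its marginals. The function names (`marginals`, `is_independent`), the dictionary representation of the joint PMF, and the two example PMFs are all hypothetical choices made for this sketch, not something from the lecture.

```python
from itertools import product
from math import isclose


def marginals(joint):
    """Return the marginal PMFs of X and Y from a joint PMF {(x, y): prob}."""
    p_x, p_y = {}, {}
    for (x, y), p in joint.items():
        p_x[x] = p_x.get(x, 0.0) + p
        p_y[y] = p_y.get(y, 0.0) + p
    return p_x, p_y


def is_independent(joint, tol=1e-9):
    """Check p_{X,Y}(x, y) == p_X(x) * p_Y(y) for every pair (x, y).

    Pairs missing from the dictionary are treated as having probability 0,
    so the check covers all combinations, not only the listed ones.
    """
    p_x, p_y = marginals(joint)
    return all(
        isclose(joint.get((x, y), 0.0), p_x[x] * p_y[y], abs_tol=tol)
        for x, y in product(p_x, p_y)
    )


# Assumed example: two fair coin flips generated separately.
independent_joint = {(x, y): 0.25 for x in (0, 1) for y in (0, 1)}

# Assumed example: Y is a copy of X, so knowing Y pins down X.
dependent_joint = {(0, 0): 0.5, (1, 1): 0.5}

print(is_independent(independent_joint))  # True
print(is_independent(dependent_joint))    # False
```

Note that the check runs over all pairs of values, which mirrors the requirement that the relation hold for all little x and little y.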

Finally, we can define the notion of independence of multiple random variables by a similar relation.

Here, the definition is for the case of three random variables, but you can imagine how the definition for any finite number of random variables will go.

Namely, the joint PMF of all the random variables can be expressed as the product of the corresponding marginal PMFs.
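For three random variables, which we call X, Y, and Z here (the names are an assumption, since the slide is not reproduced), the relation would read:

$$
p_{X,Y,Z}(x, y, z) = p_X(x)\,p_Y(y)\,p_Z(z), \quad \text{for all } x, y, z.
$$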

What is the intuitive interpretation of independence here? It means that information about some of the random variables will not change your beliefs, that is, the probabilities you assign, about the remaining random variables.

Any conditional probabilities and any conditional PMFs will be the same as the unconditional ones.

In the real world, independence models situations where each of the random variables is generated in a decoupled manner, in a separate probabilistic experiment.

And these probabilistic experiments do not interact with each other and have no common sources of uncertainty.
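To make the point about common sources of uncertainty concrete, here is a small Python sketch. It is only an illustration under assumed choices: three fair coin flips `c`, `u`, `v`, and the hypothetical helpers `joint_pmf` and `factors`. In the decoupled case X and Y come from separate flips. In the coupled case both depend on the shared flip `c`. The joint PMFs are computed exactly by enumerating the eight equally likely outcomes.

```python
from fractions import Fraction
from itertools import product


def joint_pmf(make_xy):
    """Exact joint PMF of (X, Y) built from three fair coin flips c, u, v."""
    joint = {}
    for c, u, v in product((0, 1), repeat=3):
        xy = make_xy(c, u, v)
        joint[xy] = joint.get(xy, Fraction(0)) + Fraction(1, 8)
    return joint


def factors(joint):
    """True if the joint PMF equals the product of its marginals everywhere."""
    p_x, p_y = {}, {}
    for (x, y), p in joint.items():
        p_x[x] = p_x.get(x, Fraction(0)) + p
        p_y[y] = p_y.get(y, Fraction(0)) + p
    return all(
        joint.get((x, y), Fraction(0)) == p_x[x] * p_y[y]
        for x in p_x
        for y in p_y
    )


# Decoupled: X uses only u and Y uses only v (separate experiments).
decoupled = joint_pmf(lambda c, u, v: (u, v))

# Coupled: both X and Y include the shared flip c (a common source).
coupled = joint_pmf(lambda c, u, v: (c + u, c + v))

print(factors(decoupled))  # True
print(factors(coupled))    # False
```

In the coupled case, for example, the probability that both X and Y equal 0 is 1/8, while the product of the marginal probabilities is 1/16, so the joint PMF does not factor.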