We have already seen an example in which we have two events that are independent but become dependent in a conditional model.

So independence and conditional independence are not the same.

We will now see another example in which a similar situation is obtained.

The example is as follows.

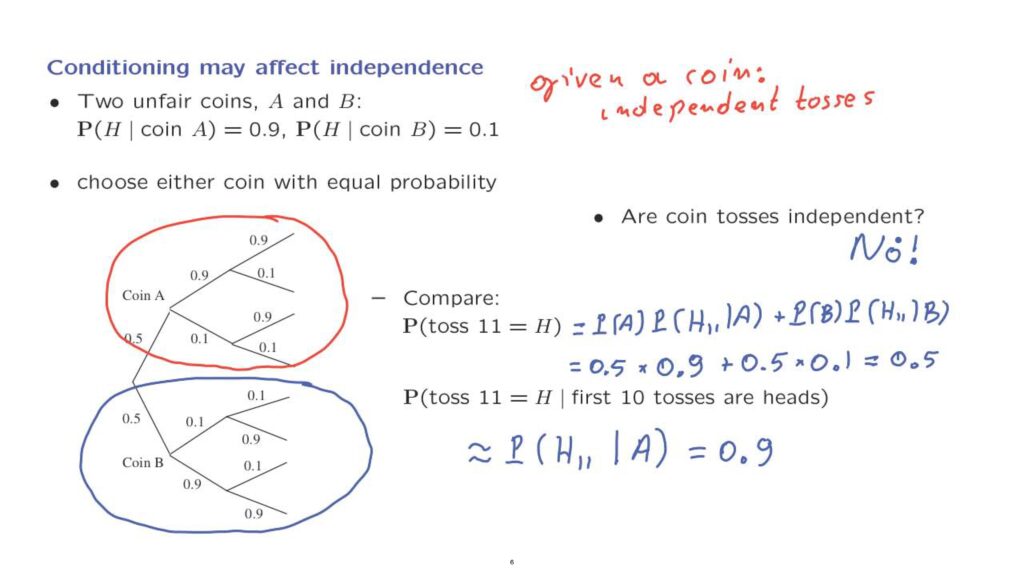

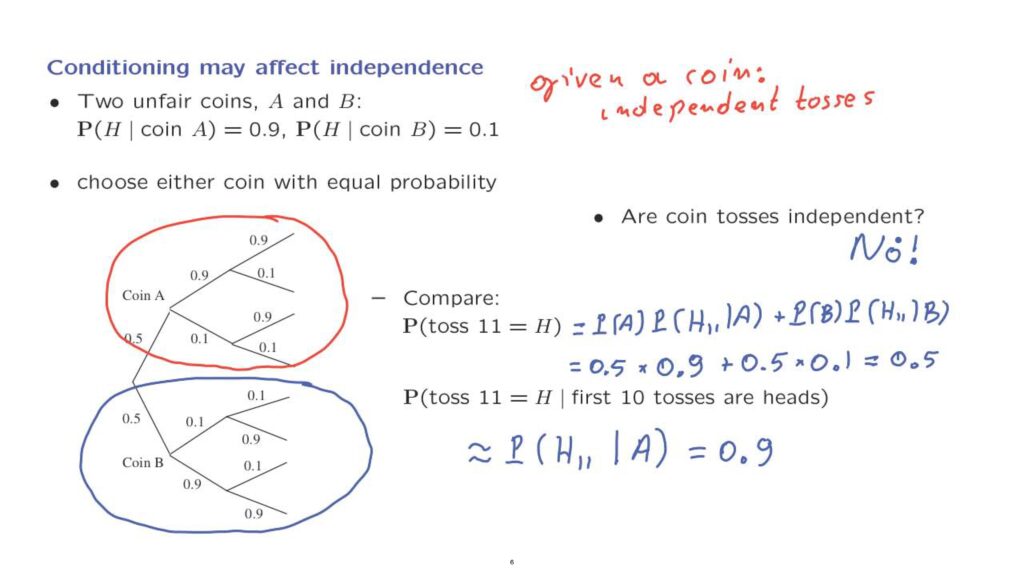

We have two possible coins, coin A and coin B.

This is the model of the world given that coin A has been chosen.

So this is a conditional model, given that the coin in our hands is coin A.

In this conditional model, the probability of heads is 0.9.

Moreover, the probability of heads in the second toss is also 0.9, no matter what happened in the first toss, and the same holds for every later toss.

So given a particular coin, we assume that we have independent tosses.

This is another way of saying that we’re assuming conditional independence.

Within this conditional model, coin flips are independent.

And the same assumption is made in the other possible conditional universe.

This is a universe in which we’re dealing with coin B.

Once more, we have conditionally independent tosses.

And this time, the probability of heads at each toss is 0.1.
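Writing $H_i$ for the event that the $i$th toss is heads, and $A$, $B$ for the events that coin A or coin B was chosen (notation introduced here for convenience), the conditional independence assumed in each of the two universes can be summarized as:

$$
\mathbf{P}(H_1 \cap H_2 \cap \cdots \cap H_n \mid A) = \prod_{i=1}^{n} \mathbf{P}(H_i \mid A) = 0.9^{n},
\qquad
\mathbf{P}(H_1 \cap H_2 \cap \cdots \cap H_n \mid B) = \prod_{i=1}^{n} \mathbf{P}(H_i \mid B) = 0.1^{n}.
$$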

Suppose now that we choose one of the two coins.

Each coin is chosen with the same probability, 0.5.

So we’re equally likely to pick coin A and then flip it over and over, or to pick coin B and then flip it over and over.

The question we will try to answer is whether the coin tosses are independent.

And by this, we mean a question that refers to the overall model.

In this overall model, where we do not know ahead of time which coin will be used, are the different coin tosses independent?

We can approach this question by trying to compare conditional and unconditional probabilities.

That’s what independence is about.

Independence is about certain conditional probabilities being the same as the unconditional probabilities.

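So this comparison, between the probability of heads in the 11th toss and the conditional probability of heads in the 11th toss given that the first 10 tosses were heads, is essentially the question of whether the 11th coin toss is independent of what happened in the first 10 coin tosses.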
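Before computing anything exactly, the comparison can be checked by simulation. The following is a minimal Monte Carlo sketch, assuming Python 3.9 or later; the helper names `simulate_run` and `estimate`, the seed, and the number of runs are illustrative choices, not part of the lecture. Each run picks a coin once, with probability 0.5 each, and then tosses that coin 11 times independently.

```python
import random

# Model parameters from the example.
P_COIN_A = 0.5                      # probability of choosing coin A (otherwise coin B)
P_HEADS = {"A": 0.9, "B": 0.1}      # probability of heads for each coin


def simulate_run(rng: random.Random, n_tosses: int = 11) -> list[bool]:
    """Choose a coin once, then toss it n_tosses times independently (True = heads)."""
    coin = "A" if rng.random() < P_COIN_A else "B"
    p = P_HEADS[coin]
    return [rng.random() < p for _ in range(n_tosses)]


def estimate(n_runs: int = 100_000, seed: int = 0) -> tuple[float, float]:
    """Monte Carlo estimates of P(11th toss is heads) and
    P(11th toss is heads | first 10 tosses are heads)."""
    rng = random.Random(seed)
    heads_11 = 0          # runs whose 11th toss is heads
    runs_10_heads = 0     # runs whose first 10 tosses are all heads
    runs_11_heads = 0     # of those, runs whose 11th toss is also heads
    for _ in range(n_runs):
        tosses = simulate_run(rng)
        heads_11 += tosses[10]
        if all(tosses[:10]):
            runs_10_heads += 1
            runs_11_heads += tosses[10]
    return heads_11 / n_runs, runs_11_heads / runs_10_heads


unconditional, conditional = estimate()
print(f"P(H11)            ~ {unconditional:.3f}")   # close to 0.5
print(f"P(H11 | 10 heads) ~ {conditional:.3f}")     # close to 0.9
```

Because these are random estimates, the printed values fluctuate around the exact answers; with 100,000 runs, roughly 17,000 of them start with 10 heads in a row, which is enough to see the gap between the two probabilities clearly.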

Let us calculate these probabilities.

For this one, we use the total probability theorem.

There’s a certain probability that we have coin A, and then we have the probability of heads in the 11th toss given that it was coin A.

There’s also a certain probability that it’s coin B, and then a conditional probability that we obtain heads given that it was coin B.

We use the numbers that are given in this example.

We have 0.5 probability that it’s coin A, 0.9 probability of heads for coin A, 0.5 probability that it’s coin B, and 0.1 probability of heads if it is indeed coin B.

We do the arithmetic, and we find that the answer is 0.5, which makes perfect sense.
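The total probability calculation can be reproduced with exact rational arithmetic. This is a small sketch using Python's standard `fractions` module; the variable names are illustrative.

```python
from fractions import Fraction

# Numbers from the example, kept as exact fractions.
p_a, p_b = Fraction(1, 2), Fraction(1, 2)    # probability of choosing each coin
p_heads_given_a = Fraction(9, 10)            # P(heads | coin A)
p_heads_given_b = Fraction(1, 10)            # P(heads | coin B)

# Total probability theorem: weight each coin's probability of heads
# by the probability of having that coin.
p_heads_11 = p_a * p_heads_given_a + p_b * p_heads_given_b
print(p_heads_11)  # 1/2
```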

We have coins with different biases, but the average bias is 0.5.

If we do not know which coin it’s going to be, the average bias is going to be 0.5.

So the probability of heads in any particular toss is 0.5 if we do not know which coin it is.

Suppose now that someone told you that the first 10 tosses were heads.

Will this affect your beliefs about what’s going to happen in the 11th toss? We can calculate this quantity using the definition of conditional probability, or Bayes’ rule, but let us instead think intuitively.
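For comparison, here is a sketch of the direct calculation via the definition of conditional probability, which the intuitive argument sidesteps. Writing $H_i$ for the event that the $i$th toss is heads (notation introduced here), the probability of each run of heads follows from the total probability theorem together with conditional independence given the coin:

$$
\mathbf{P}(H_{11} \mid H_1 \cap \cdots \cap H_{10})
= \frac{\mathbf{P}(H_1 \cap \cdots \cap H_{11})}{\mathbf{P}(H_1 \cap \cdots \cap H_{10})}
= \frac{0.5 \cdot 0.9^{11} + 0.5 \cdot 0.1^{11}}{0.5 \cdot 0.9^{10} + 0.5 \cdot 0.1^{10}}
\approx 0.9.
$$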

If it is coin B, the event of 10 heads in a row is extremely unlikely.

So if I see 10 heads in a row, then I should conclude that I’m almost certainly dealing with coin A.

So the information that I’m given tells me that I’m extremely likely to be dealing with coin A.
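The claim that 10 heads make coin A almost certain can be quantified with Bayes’ rule. A minimal sketch with exact fractions (variable names are illustrative): the likelihood of 10 heads in a row is $0.9^{10}$ under coin A and $0.1^{10}$ under coin B, and the equal priors cancel.

```python
from fractions import Fraction

prior_a = prior_b = Fraction(1, 2)             # each coin chosen with probability 0.5
q_a, q_b = Fraction(9, 10), Fraction(1, 10)    # probability of heads for coin A, coin B
n = 10                                         # observed: 10 heads in a row

# Conditional independence given the coin: the likelihood of n heads is q ** n.
like_a = q_a ** n
like_b = q_b ** n

# Bayes' rule: posterior probability that the coin is A.
posterior_a = prior_a * like_a / (prior_a * like_a + prior_b * like_b)

print(float(like_a), float(like_b))   # about 0.349 versus 1e-10
print(float(posterior_a))             # extremely close to 1
```

The posterior works out to $9^{10}/(9^{10}+1)$, which differs from 1 by less than $10^{-9}$.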

So we might as well condition on the essentially equivalent information that it is coin A that I’m dealing with.

And if it is coin A, then the probability of heads in the 11th toss is 0.9.

So the conditional probability, essentially 0.9, is quite different from the unconditional probability of 0.5.
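The intuitive answer can be checked exactly. This sketch uses Python's `fractions` module and a hypothetical helper `p_all_heads`: it computes the probability of a run of heads by the total probability theorem, then takes the ratio given by the definition of conditional probability.

```python
from fractions import Fraction

prior = {"A": Fraction(1, 2), "B": Fraction(1, 2)}      # probability of choosing each coin
p_heads = {"A": Fraction(9, 10), "B": Fraction(1, 10)}  # probability of heads for each coin


def p_all_heads(n: int) -> Fraction:
    """P(first n tosses are all heads), by total probability over the two coins."""
    return sum(prior[c] * p_heads[c] ** n for c in prior)


unconditional = p_all_heads(1)                     # P(heads on any single toss, e.g. the 11th)
conditional = p_all_heads(11) / p_all_heads(10)    # P(H11 | first 10 tosses are heads)

print(float(unconditional))   # 0.5
print(float(conditional))     # just under 0.9
```

The exact conditional value is $(9^{11}+1)/\big(10\,(9^{10}+1)\big)$, a hair below 0.9, while the unconditional value is exactly 0.5.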

And therefore, information on the first 10 tosses affects my beliefs about what’s going to happen in the 11th toss.

And therefore, we do not have independence between the different tosses.